Clinical Trial Data vs Real-World Side Effects: What You Need to Know

Jan 10, 2026

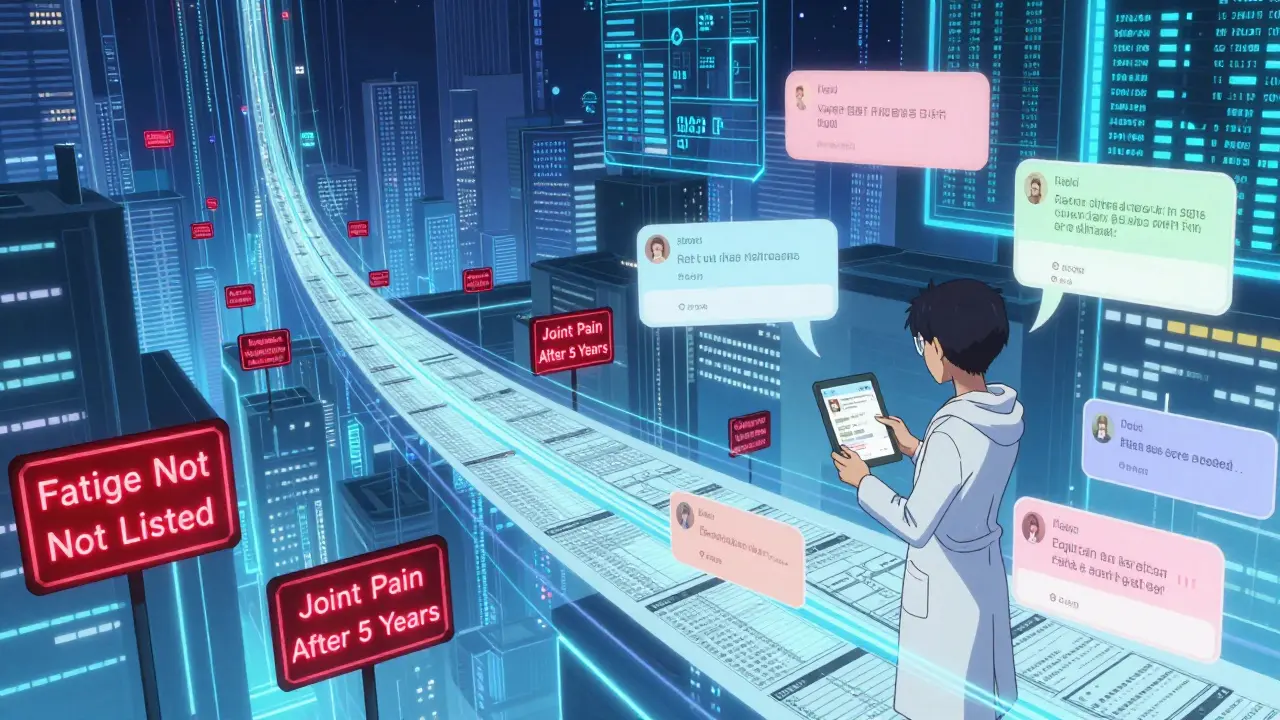

When you pick up a new prescription, the label lists side effects like nausea, dizziness, or fatigue. But here's the thing: those numbers don't tell the whole story. The side effects on the FDA-approved label come from clinical trial data, a tightly controlled snapshot of what happened in a small group of people over a few months. What happens when millions of real people take that drug for years? That's where real-world side effects come in, and they often reveal things the trials never saw.

How Clinical Trials Really Work

Clinical trials are designed to answer one question: does this drug work under ideal conditions? To do that, they lock down everything else. Participants are carefully selected: you can't have other diseases, take other meds, or be over a certain age. The trial runs in hospitals or clinics on strict schedules. Side effects are recorded at every visit using standardized terminology such as CTCAE (Common Terminology Criteria for Adverse Events), which has over 790 specific terms for everything from mild rash to death.

But here's the catch: most phase 3 trials enroll fewer than 500 people. That's not enough to reliably catch side effects that happen in 1 in 1,000 or even 1 in 10,000 patients. Take rosiglitazone, a diabetes drug approved in 1999. The trials showed it lowered blood sugar. They didn't show it increased heart attack risk by 43%. That only became clear years later, after the drug had been widely prescribed.

Trials also miss long-term effects. If a drug causes joint pain after five years, you won't see it in a 12-month trial. And because symptoms are collected at scheduled visits, issues that come and go between them, like fatigue that shows up only at night or brain fog after dinner, are often overlooked. Patients in trials report side effects during office visits. Real life doesn't work that way.

Real-World Data: Messy, But Honest

Real-world side effect data comes from the chaos of everyday life. It's pulled from millions of electronic health records (EHRs), insurance claims, and reports to the FDA's Adverse Event Reporting System (FAERS). In 2022 alone, FAERS received over 2.1 million reports, up from 1.4 million in 2018. These aren't controlled studies. They're snapshots of what happens when a drug hits the street.

This is where the real surprises show up. A 2022 survey found that 63% of patients experienced side effects not listed on their drug's label, and nearly half of those were moderate to severe enough to disrupt daily life. In a thread on Reddit's r/Pharmacy, 78% of the pharmacists who responded said they see discrepancies between trial data and what patients actually report, especially with newer drugs like GLP-1 agonists for weight loss. Patients describe fatigue, brain fog, or nausea at home, but the trial only asked about symptoms during clinic visits.

Real-world data also catches rare events. Fluoroquinolone antibiotics were restricted in 2019 after analysis of 1.2 million patient records revealed disabling tendon and nerve damage. That kind of signal would've taken decades to find in a clinical trial.

But real-world data isn't perfect. Only 2-5% of actual side effects get reported to FAERS. Many doctors don't report them because it takes about 22 minutes per case, and most don't have the time. And EHRs? Only 34% of recorded side effects have enough detail for regulators to act on. One note might say "felt weird," while another says "severe dizziness with blurred vision." That inconsistency makes it hard to separate real signals from noise.
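The gap between a 500-person trial and 1.2 million patient records is easy to put into numbers. Here is a minimal sketch in Python, assuming every patient independently has the same chance of the side effect, that every case is noticed and recorded, and that the effect shows up within the observation window (none of which holds perfectly in practice). The helper names `prob_at_least_one` and `patients_needed` are hypothetical, not part of any drug-safety tool; the 500-patient and 1.2-million-record figures come from this article.

```python
import math

# Illustrative sketch with hypothetical helpers (not from any regulatory tool).
# Assumes each patient independently has the same chance `rate` of the
# side effect, and that every case is noticed and recorded.


def prob_at_least_one(rate: float, n: int) -> float:
    """Probability that at least one of n patients experiences the effect."""
    # P(no cases) = (1 - rate) ** n, so P(at least one) is its complement.
    return 1.0 - (1.0 - rate) ** n


def patients_needed(rate: float, confidence: float = 0.95) -> int:
    """Smallest n with at least `confidence` chance of seeing one or more cases."""
    # Solve 1 - (1 - rate) ** n >= confidence for n, then round up.
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - rate))


if __name__ == "__main__":
    for rate in (1 / 1_000, 1 / 10_000):
        label = f"1 in {round(1 / rate):,}"
        # Compare a ~500-patient trial with the 1.2 million records cited above.
        for n in (500, 1_200_000):
            print(f"{label}, n={n:,}: {prob_at_least_one(rate, n):.1%} chance of >= 1 case")
        print(f"{label}: {patients_needed(rate):,} patients for a 95% chance")
```

Under these assumptions, a 500-patient trial has roughly a 39% chance of recording even one case of a 1-in-1,000 side effect and only about a 5% chance for a 1-in-10,000 effect, while 1.2 million records make at least one case near-certain. `patients_needed` returns about 3,000 and 30,000 patients for a 95% chance, matching the statistician's "rule of three" (roughly 3 divided by the rate).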

When Real-World Data Lies

Here's the tricky part: real-world data can give false alarms. In 2018, a study linked anticholinergic medications (used for allergies, overactive bladder, and depression) to higher dementia risk. It looked solid, until researchers dug deeper. People taking those drugs were already more likely to have early dementia symptoms. The medication wasn't causing the dementia; the early disease was leading people to take the drug. That's called confounding by indication (the reason for the prescription is itself linked to the outcome), and it's everywhere in real-world data.

Another problem? Timing. If someone starts a new drug and gets sick a week later, it's easy to blame the drug. But what if they were already getting sick? Raw real-world data doesn't control for that. Clinical trials do: they use randomization and placebos to isolate cause and effect.

That's why the FDA doesn't ban drugs based on real-world signals alone. It uses tools like the Sentinel Initiative, which analyzes 300 million patient records using 17 different statistical methods. Even then, validating a signal takes 3-9 months. Twitter spotted early warnings about ivermectin side effects 47 days before FAERS did, but Twitter isn't a regulatory tool. It's a rumor mill with data.

What Happens When Both Worlds Collide

The FDA doesn't treat these two data sources as rivals. They're partners. Clinical trials tell you whether a drug works. Real-world data tells you what happens when everyone gets to use it. Since 2017, the FDA has increasingly written real-world evidence into post-marketing safety plans: in 2022, 67% of approvals included real-world data requirements, up from 29% in 2017. Oncology leads the way: 42% of post-marketing safety studies now use real-world data, because cancer patients are often older, sicker, and on multiple drugs, exactly the group excluded from trials.

New tools are helping bridge the gap. The Apple Heart Study tracked nearly 420,000 people using smartwatches to detect irregular heart rhythms. That's a real-world study at trial-level scale. AI is getting better too. Google Health's algorithm analyzed 216 million clinical notes and found 23% more links between drugs and side effects than traditional methods. But experts agree: real-world data won't replace clinical trials. It complements them. As Dr. Nancy Dreyer of IQVIA put it, "Trials establish initial safety. Real-world data monitors long-term effects."

Madhav Malhotra

January 12, 2026 AT 10:09

Man, I never realized how much gets left out of those drug labels. Back home in India, we just take what the doctor says and hope for the best. But reading this? It's wild how trials skip over older folks, people with multiple conditions… we're just not in the lab. Real life ain't clean.

Jennifer Littler

January 13, 2026 AT 03:53

The confounding bias in FAERS data is non-trivial, especially with polypharmacy cohorts. Longitudinal EHR mining requires propensity score matching and time-varying covariates to mitigate selection bias. Otherwise, you're just correlating noise with pharmaceutical exposure.

Jason Shriner

January 14, 2026 AT 00:10

So let me get this straight… the FDA spends billions to prove a drug works… then lets millions of people take it… just to find out it turns your brain to mush or makes your tendons scream? And we're supposed to be surprised? 🤡

Alfred Schmidt

January 15, 2026 AT 05:36

THIS IS WHY PEOPLE DIE! They don't tell you the truth! They test on 500 people and then sell it to 10 million! My aunt took that GLP-1 thing and lost 40 pounds… then started hallucinating at 3 a.m. and no one would listen because it wasn't in the 'trial data'! WHERE IS THE JUSTICE?!?!?!?

Christian Basel

January 16, 2026 AT 03:26

Real-world data is just anecdotal noise with a fancy name. If you want real science, stick to RCTs. Everything else is just correlation dressed up like causation.

Matthew Miller

January 17, 2026 AT 14:15

Pharma's been lying for decades. Clinical trials are marketing tools disguised as science. They cherry-pick participants, bury adverse events, and push drugs before they're safe. The FDA's just a rubber stamp for profit. Wake up.

Priya Patel

January 18, 2026 AT 21:49

OMG I've been keeping a journal of my side effects since I started my med: fatigue after lunch, weird dreams, that buzzing in my ears. I showed my doctor and he actually listened! Turns out it's not 'just stress'! We need more people doing this!! 💪❤️

Sean Feng

January 19, 2026 AT 10:58

So what? People get side effects. That's life. Stop complaining and take your pills.

Priscilla Kraft

January 20, 2026 AT 22:12

This is so important! 🙌 I'm a nurse and I've seen patients ignore symptoms because they think 'it's not on the label.' We need to normalize reporting, even small stuff. It helps everyone. And yes, wearables are game-changers. My patient's Apple Watch caught her atrial fibrillation before she even felt it! ❤️🩺

Sam Davies

January 21, 2026 AT 06:16

Oh, how quaint. We now have 'real-world data' to compensate for the fact that clinical trials are too expensive to properly conduct. How very… democratic. Next, we'll let TikTok reviewers approve chemotherapy regimens.

Alex Smith

January 22, 2026 AT 05:22

Here's the thing no one says: trials are designed to get approval. Real-world data is designed to keep people alive. One's for regulators. The other's for humans. We're not talking about the same goal. And yet, we still act like they're interchangeable. That's the real problem.

Roshan Joy

January 23, 2026 AT 12:48

I've been on meds for 8 years now. Two side effects only showed up after year 5. No one warned me. But I started logging everything: time of day, food, stress level. Now I know what triggers the brain fog. Sharing this with my doc changed my whole treatment. Small steps matter. 🙏

Adewumi Gbotemi

January 25, 2026 AT 05:15

Back in Nigeria, we don't have fancy apps or EHRs. But we talk. We tell our neighbors, our cousins, our aunts. If a medicine makes you sick, word gets around. Maybe that's real-world data too. Simple, but true.

Michael Patterson

January 26, 2026 AT 16:18

Look, I'm not saying the system is perfect, but you're oversimplifying this. Real-world data is messy, yes, but that doesn't mean it's useless. The problem is people don't understand stats. They see '63% had side effects not on label' and think EVERYONE is getting hurt. But it's not 63% of all users; it's 63% of those who reported. Big difference. Also, typos are everywhere, and that messes with NLP models. Fix that first.